Gzip compressed league files

This is a small feature, but it gets a blog post because it needs a little explanation.

The Export Leagues screen now has an option to apply gzip compression to league files, and you can now import gzip compressed league files.

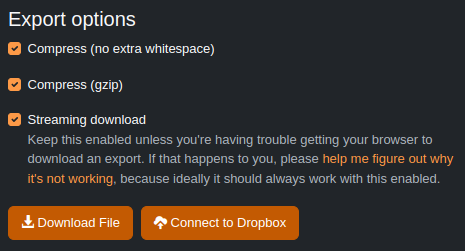

This is what the option looks like (except for a few of you using old browsers that don't support it):

When you enable the "Compress (gzip)" option, the file you get will be a ".json.gz" file rather than just ".json". Then you can use that compressed file directly within BBGM anywhere you would have previously used a .json file, or (if you want to get the raw JSON file inside it) you can extract it. I believe all modern operating systems have native support for extracting .gz files, but if yours doesn't, you can find some free software out there to do it.
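If you're curious how software can tell a gzipped file from a plain .json one, here's a minimal sketch. It is not BBGM's actual import code, and both helper names are made up for illustration. It relies only on the fact that every gzip stream starts with the two bytes `0x1f 0x8b`.

```typescript
// Hypothetical helper (not from BBGM): detect gzip by its two-byte magic number.
// Every gzip stream begins with the bytes 0x1f 0x8b.
function looksLikeGzip(bytes: Uint8Array): boolean {
  return bytes.length >= 2 && bytes[0] === 0x1f && bytes[1] === 0x8b;
}

// Hypothetical helper: a cheaper check based only on the file name.
function hasGzipExtension(fileName: string): boolean {
  return fileName.toLowerCase().endsWith(".gz");
}
```

Checking the bytes is more reliable than checking the name, since a file can be renamed without its contents changing.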

This feature is particularly handy if you're sharing the file online somewhere and a large file is slow to work with or takes a long time to upload/download. So for people using the Dropbox export feature, I think a lot of you might want to enable gzip compression and take advantage of the smaller file sizes.

How much smaller? With the "Compress (no extra whitespace)" option enabled, gzip files are about 1/3 the size of raw files. Without that other compression setting enabled, the difference is even larger, as gzip can at least somewhat compress the whitespace.
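The exact ratio depends on your league, so here's a rough sketch for measuring it on your own data. It assumes an environment with the standard `CompressionStream`, `Blob`, and `Response` globals (current browsers, or Node.js 18+). The function names are made up, and the 2-space indentation is only a stand-in for whatever whitespace the real export uses.

```typescript
// Hypothetical helper: gzip a string and report how many bytes come out.
async function gzipSize(text: string): Promise<number> {
  const compressed = new Blob([text]).stream().pipeThrough(new CompressionStream("gzip"));
  const buffer = await new Response(compressed).arrayBuffer();
  return buffer.byteLength;
}

// Compare raw and gzipped byte counts, with and without extra whitespace.
// Assumption: 2-space indentation approximates the "extra whitespace" export.
async function compareSizes(league: unknown) {
  const minified = JSON.stringify(league);
  const pretty = JSON.stringify(league, null, 2);
  return {
    minifiedRaw: new Blob([minified]).size,
    minifiedGzip: await gzipSize(minified),
    prettyRaw: new Blob([pretty]).size,
    prettyGzip: await gzipSize(pretty),
  };
}
```

Calling `compareSizes(myLeague).then(console.log)` on a parsed league object prints the four byte counts so you can work out the ratios yourself.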

There is some performance cost for importing/exporting leagues, but not much. In my testing, importing a gzipped league file is about 10% slower. And exporting is actually faster - I'm not entirely sure why, but I guess if the compression is happening faster than data is being written to disk/network, then it'll be faster.

Why gzip? Why not a normal zip file?

In normal zip files, each compressed file is preceded by a header (for a single-file archive like a league file, that means a section at the start of the file) containing metadata about the contents of the archive, including how big the compressed data is. That's not a huge problem for most software... you can just compress the files first and then write the header later. But for software running in a browser, that's a problem. There's no good way to go back and edit the header of the file after you've written some other data to disk already (okay technically you can in Chrome, but not in other browsers). So I think the only options would be to either keep the compressed file in memory until it's done (not feasible for BBGM because the files can be huge!) or run the compression twice (once to see how big it is, then write the header, then compress again while actually writing to disk) - but that would be twice as slow.

On the other hand, gzip doesn't have this problem, because it stores its checksum and size at the end of the stream rather than in the header. It just works out-of-the-box in a couple lines of code. That "out-of-the-box" part is also why I'm using gzip and not any other more modern compression algorithm. Browsers have native support for gzip, but not for any fancy new compression algorithm. Maybe some day they will, and then I can use this same approach to get even better performance.
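To give a feel for what "a couple lines of code" can look like, here's a minimal sketch using the browser's built-in `CompressionStream` and `DecompressionStream`. This is not BBGM's actual export/import code. The function names are invented for illustration, and a real export would pipe the compressed stream to a file rather than collect it in memory.

```typescript
// Hypothetical export helper: turn chunks of JSON text into a gzip byte stream.
// Data is compressed as it flows through, so nothing has to be rewritten later.
function gzipJsonChunks(chunks: Iterable<string>) {
  const iterator = chunks[Symbol.iterator]();
  const text = new ReadableStream<string>({
    pull(controller) {
      const next = iterator.next();
      if (next.done) {
        controller.close();
      } else {
        controller.enqueue(next.value);
      }
    },
  });
  return text
    .pipeThrough(new TextEncoderStream()) // strings -> UTF-8 bytes
    .pipeThrough(new CompressionStream("gzip")); // bytes -> gzip bytes
}

// Hypothetical import helper: decompress a .json.gz file and parse the JSON.
// For brevity this reads the whole text at once instead of parsing incrementally.
async function readGzipJson(file: Blob): Promise<unknown> {
  const decompressed = file.stream().pipeThrough(new DecompressionStream("gzip"));
  const text = await new Response(decompressed).text();
  return JSON.parse(text);
}
```

For a quick round trip, `new Response(gzipJsonChunks(['{"a":', '1}'])).blob()` gives you a gzipped `Blob` that `readGzipJson` can read back.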

But for now, it's just gzip, and I think that's still a pretty good improvement for people who are often exporting/importing large leagues.